Quantified Self Meets Virtual Reality

This week a few of my interests have intersected: the Quantified Self, virtual reality, biofeedback/neurofeedback, and consciousness. Earlier this year I came across the Oculus Rift, a next-gen virtual reality headset that looks to be a game changer (no pun intended). Like many people who grew up fascinated with technology in the 90s, I remember virtual reality as the hot thing that always seemed about to arrive any year now. Most people who saw The Lawnmower Man were probably fascinated by the possibilities of VR, and also came away with false expectations about how far along the technology really was. In 1998 I was given a private tour of a virtual reality arcade that hadn’t opened to the public yet. They had full headgear, and even a body gyro that looked like it came straight out of the movies. Sadly, I don’t think it lasted long commercially, and like most things VR, there hasn’t been much commercial advancement since. Well, at least until the Oculus Rift.

I think the Rift is going to change that (see how The Oculus Rift Dev Community is Collaborating to Change The World). Although it’s not commercially available yet (as in ready for prime-time consumer use), you can order a developer kit with a headset that is pretty amazing. Sure, it isn’t 1080p: the resolution is limited to 1280×800 (640×800 per eye), and there aren’t a ton of games or apps out there yet. BUT it is the wild west for VR, and things are going to get interesting very fast!

So far I’ve tried two games since my Rift arrived the other day. The first was the Tuscany demo, where you get to walk around a beautiful, serene landscape and explore the virtual world first-hand. Although the graphics may not blow you away at first glance, the experience is definitely immersive. It’s a very cool feeling to be able to look up, down, and over your shoulder and feel like you are inside a landscape. I love this video of a 90-year-old grandma experiencing Tuscany and VR for the first time.

The second game I’ve played is the famous Half-Life 2 (available on Steam; it supports the Oculus Rift if you add -vr as a command-line launch option). Tuscany is serene and beautiful, but its graphics are a little pixelated (the Rift is currently a dev model, after all). Jumping inside Half-Life 2, on the other hand, is nothing short of amazing. Walking around, looking up at the sky, and having one of the drones fly overhead and photograph you is a little unsettling! It feels very real, and although I didn’t experience any VR sickness, I did have to take a break after 15 to 20 minutes.

So far this sounds great and could make for some novel gaming. But what does this have to do with the Quantified Self, biofeedback, and consciousness? I’ve been thinking about some of these ideas for some time, and some may seem out there, but here are a few ideas for future projects:

Virtual Biofeedback

Visualizing biofeedback (heart rate, activity levels, etc.) and neurofeedback (EEG/brainwave data) is powerful. Imagine being able to explore that data in a 3D, immersive, and tactile fashion.

Experiment #1 – Visualize your heartbeat and induce an OBE

OK, this admittedly sounds OUT there, but it is quite interesting. A few months ago I came across an article titled “Synchronized Virtual Reality Heartbeat Triggers Out of Body Experience.”

“New research demonstrates that triggering an out-of-body experience (OBE) could be as simple as getting a person to watch a video of themselves with their heartbeat projected onto it. According to the study, it’s easy to trick the mind into thinking it belongs to an external body and manipulate a person’s self-consciousness by externalizing the body’s internal rhythms. The findings could lead to new treatments for people with perceptual disorders such as anorexia and could also help dieters too.”

The idea would be to create a 3D model of yourself, or at least a human avatar, and detect your heart rate using one of the many heart rate monitors I have (or, ideally, figure out how to get real-time data off my Basis watch). It would be cool to have a virtual heart beating in sync with your own, with the avatar’s outline flashing in time, to simulate the experiment and trigger the bizarre, but scientifically reproducible, OBE induced in the studies. I think visualizing the heartbeat externally would be a cool experiment in and of itself, but the perceptual and consciousness effects of the experiment would be interesting to try. I’ve reached out to Anna Mikulak to get the original article PDF and details on the study, and I have also been checking out some of the work by Jane E. Aspell, who has done similar experiments (Keeping in Touch with One’s Self: Multisensory Mechanisms of Self-Consciousness).

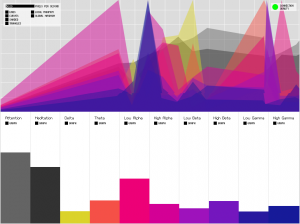
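The timing side of the heartbeat-synced avatar described above could be sketched roughly like this. It assumes the heart rate monitor can stream beat-to-beat (RR) intervals in milliseconds (getting that stream off the Basis watch is still the open question), and the function names `beat_times`, `flash_intensity`, and `bpm_to_rr` are hypothetical. It turns the intervals into beat timestamps and computes a 0-to-1 glow for the avatar’s outline that peaks on each beat and fades out; a render loop would call `flash_intensity` once per frame.

```python
import bisect
import math


def beat_times(rr_intervals_ms, start_s=0.0):
    """Convert beat-to-beat (RR) intervals in ms into absolute beat times in seconds."""
    times = []
    t = start_s
    for rr in rr_intervals_ms:
        t += rr / 1000.0
        times.append(t)
    return times


def bpm_to_rr(bpm):
    """Fallback when a monitor only reports heart rate: average RR interval in ms."""
    return 60000.0 / bpm


def flash_intensity(t, beats, decay_s=0.15, offset_s=0.0):
    """Glow intensity (0..1) of the avatar outline at time t (seconds).

    Peaks at 1.0 on each beat and decays exponentially with time constant decay_s.
    A nonzero offset_s delays every flash, giving an out-of-sync condition.
    """
    shifted_t = t - offset_s
    i = bisect.bisect_right(beats, shifted_t)  # number of beats at or before shifted_t
    if i == 0:
        return 0.0  # no beat has happened yet
    since_beat = shifted_t - beats[i - 1]
    return math.exp(-since_beat / decay_s)
```

The `offset_s` parameter is a design choice for comparison runs: shifting the flashes away from the real heartbeat lets you contrast a synchronous session with an asynchronous one.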

Experiment #2 – Visualize your EEG data

A couple of years ago I created a couple of apps for the NeuroSky EEG headset for visualizing your brainwave data (beta, alpha, theta, and delta) and exporting it to CSV. See the Processing Brain Grapher and MindStream. Wouldn’t it be cool to visualize this in a 3D setting? This also sounds out there, but I had a dream about it last year. Imagine being able to immerse yourself in a 3D representation of your brainwave data and try to alter your mental states.
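If you work from the headset’s raw signal rather than its precomputed band values, the split into the bands mentioned above could be sketched like this. Assumptions: raw samples at 512 Hz, a one-second window (512 samples, so each frequency bin is 1 Hz wide), and band edges that are common conventions rather than necessarily the ones NeuroSky uses; `band_powers` is a hypothetical name. It uses a naive DFT for readability, where a real app would use an FFT library.

```python
import math

# Common EEG band edges in Hz; exact boundaries vary between sources.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
}


def band_powers(samples, fs=512):
    """Relative power per band for one window of raw EEG samples (naive DFT)."""
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove the DC offset
    max_hz = max(hi for _, hi in BANDS.values())
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2):
        freq = k * fs / n
        if freq >= max_hz:
            break  # no band above this frequency, stop early
        re = sum(x[j] * math.cos(2 * math.pi * k * j / n) for j in range(n))
        im = -sum(x[j] * math.sin(2 * math.pi * k * j / n) for j in range(n))
        power = re * re + im * im
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                powers[name] += power
    total = sum(powers.values()) or 1.0  # avoid dividing by zero on a flat signal
    return {name: p / total for name, p in powers.items()}
```

The relative powers could then drive parameters of a 3D scene, for example a hypothetical mapping where a higher alpha share brightens the sky.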

One basic approach is to relax and watch the effect on your focus and attention levels. A wackier idea is to experiment and see what effect brainwave entrainment audio has on your brainwaves. Wouldn’t it be cool to have a console in your virtual world where you could turn a few knobs to adjust the audio and binaural beats?
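The “knobs” idea above maps onto two parameters: a carrier tone and a beat frequency. Binaural beats are usually produced by playing a slightly different tone in each ear, so the perceived beat equals the difference between the two. This sketch uses only Python’s standard `wave`, `struct`, and `math` modules to generate such a tone and save it as a stereo WAV file; the function names and default values are illustrative assumptions, and it says nothing about what the audio actually does to your brainwaves.

```python
import math
import struct
import wave


def binaural_samples(carrier_hz, beat_hz, seconds, rate=44100, amplitude=0.5):
    """Stereo 16-bit samples: carrier in the left ear, carrier + beat in the right."""
    left_hz = carrier_hz
    right_hz = carrier_hz + beat_hz
    peak = int(amplitude * 32767)  # stay inside the signed 16-bit range
    frames = []
    for n in range(int(seconds * rate)):
        t = n / rate
        left = int(peak * math.sin(2 * math.pi * left_hz * t))
        right = int(peak * math.sin(2 * math.pi * right_hz * t))
        frames.append((left, right))
    return frames


def write_wav(path, frames, rate=44100):
    """Write (left, right) int16 frames to a stereo WAV file."""
    with wave.open(path, "wb") as wf:
        wf.setnchannels(2)
        wf.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wf.setframerate(rate)
        wf.writeframes(b"".join(struct.pack("<hh", left, right) for left, right in frames))
```

For example, `write_wav("beat.wav", binaural_samples(200, 10, 30))` writes 30 seconds with a 200 Hz tone on the left and 210 Hz on the right. A virtual console would just set `carrier_hz` and `beat_hz` from its knobs and regenerate the audio.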

Random Ideas

- If you collect massive amounts of geolocation data (latitude/longitude), imagine having a Google Street View experience where you can see where you’ve been. BUT imagine also being able to jump to your top locations and even add contextual overlays like heatmaps.

- Create 3D virtual models of your favorite places, built from the image and video data you’ve collected with lifelogging gadgets (think Gordon Bell’s SenseCam). Creating virtual models isn’t exactly a piece of cake if it isn’t your area of expertise. But some technologies are making progress at analyzing your photos and videos and creating 3D representations with relatively little work. See VideoTrace.

- Create a timeline and walkthrough where you can visualize metrics (activity level, heart rate, blood work, 23andMe data) and actually interact with this data as objects. Again, this may just sound like a novelty, but there may be some practical use in creating and exploring a three-dimensional (four-dimensional if you add time) archive of your biometric data.

- Make a tangible, virtual memory palace. Have you heard of the memory palace technique? It uses the brain’s spatial skills to help you remember facts, faces, places, and things. Why not create an ACTUAL virtual room or castle where you can place your lifelogging events (pictures, memories, knowledge, etc.) and make them easier to recall?

- Build your own lifelike virtual double. This might sound uncanny, but a company called Infinite Realities is already developing and using gigapixel scanning technology to create amazingly detailed virtual representations of people: http://ir-ltd.net/#about
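For the geolocation idea in the list above, one simple way to find “top locations” and build counts for a heatmap overlay is to snap each latitude/longitude sample to a fixed grid and count samples per cell. This is plain grid binning, swapped in for simplicity rather than a proper clustering algorithm such as DBSCAN; the 0.001-degree cell size (roughly 111 m of latitude) is an arbitrary assumption, and the function names are hypothetical.

```python
from collections import Counter


def grid_cell(lat, lon, cell_deg=0.001):
    """Snap a point to the nearest grid point; 0.001 deg of latitude is about 111 m."""
    return (round(lat / cell_deg), round(lon / cell_deg))


def location_heatmap(points, cell_deg=0.001):
    """Count how many (lat, lon) samples fall into each grid cell."""
    return Counter(grid_cell(lat, lon, cell_deg) for lat, lon in points)


def top_locations(points, n=3, cell_deg=0.001):
    """Return the n busiest cells as (center_lat, center_lon, sample_count)."""
    heat = location_heatmap(points, cell_deg)
    return [(i * cell_deg, j * cell_deg, count) for (i, j), count in heat.most_common(n)]
```

Longitude cells get narrower away from the equator, so a real implementation might size cells in meters instead. The heatmap counts could color an overlay, and the top cells could become jump points in the virtual walkthrough.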

One Response

I am waiting for my Oculus to arrive and want to try it with the NeuroSky as well.

Have you tried using them both together, and is it still possible to get a decent signal from the MindSet when you have the OR on?